Pageturner: Insight Data Science Project

I recently completed the Silicon Valley Insight Data Science Fellowship program, part of which involved quickly developing an app and then demonstrating it at various companies in the area. For this, I developed a first-pass version of Pageturner, an app that listens to the user playing music and scrolls or turns the pages of the sheet music accordingly. It can also find and display sheet music from a library based on what the user is playing.

Background and Overview

There are three major algorithmic components to this app: (1) auditory note recognition, (2) optical music recognition, and (3) sequence alignment that tolerates errors. The auditory note recognition component builds on an exploration described in an earlier blog post, with several changes to the underlying model, plus technical changes that let it use the HTML5 microphone API and run the recognition entirely in the browser (to avoid latency problems on slow internet connections). The optical music recognition component relies heavily on the existing tool Audiveris, with only some minor additional processing to locate the measures in the original image file. The main challenges in building the demo Pageturner app were therefore developing the sequence alignment component and assembling all of the pieces into a functioning web app.
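The schematics below name Needleman-Wunsch as the alignment algorithm. To make "alignment with errors" concrete, here is a minimal sketch of Needleman-Wunsch global alignment between a sequence of recognized notes and the notes from the score, with notes as MIDI numbers. The scoring values (`MATCH`, `MISMATCH`, `GAP`) are assumptions for illustration, not the app's actual parameters. A wrong note shows up as a mismatch, and a skipped or extra note shows up as a gap.

```python
from typing import List, Optional, Tuple

# Assumed, illustrative scoring scheme (not taken from the app).
MATCH = 1
MISMATCH = -1
GAP = -1


def needleman_wunsch(
    played: List[int], score: List[int]
) -> Tuple[int, List[Tuple[Optional[int], Optional[int]]]]:
    """Globally align two note sequences given as MIDI note numbers.

    Returns the optimal alignment score and the aligned pairs; None marks a
    gap (an extra played note, or a score note that was skipped).
    """
    n, m = len(played), len(score)
    # F[i][j] = best score for aligning played[:i] with score[:j].
    F = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        F[i][0] = i * GAP
    for j in range(1, m + 1):
        F[0][j] = j * GAP
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = MATCH if played[i - 1] == score[j - 1] else MISMATCH
            F[i][j] = max(F[i - 1][j - 1] + sub, F[i - 1][j] + GAP, F[i][j - 1] + GAP)

    # Trace back from the bottom-right cell to recover one optimal alignment.
    pairs: List[Tuple[Optional[int], Optional[int]]] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            sub = MATCH if played[i - 1] == score[j - 1] else MISMATCH
            if F[i][j] == F[i - 1][j - 1] + sub:
                pairs.append((played[i - 1], score[j - 1]))
                i, j = i - 1, j - 1
                continue
        if i > 0 and F[i][j] == F[i - 1][j] + GAP:
            pairs.append((played[i - 1], None))  # extra note played
            i -= 1
        else:
            pairs.append((None, score[j - 1]))  # score note skipped
            j -= 1
    pairs.reverse()
    return F[n][m], pairs
```

For example, playing C D F G (`[60, 62, 65, 67]`) against a score of C D E F G (`[60, 62, 64, 65, 67]`) gives four matches and one gap for a score of 3, with the skipped E aligned to `None`.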

Recorded Demo

In the live demo, I describe the sequence alignment algorithm in some detail and pull out a melodica to play while the app follows along in real time. (If you are interested in seeing the demo in person, email me.) The recording below gives a sense of the playing-and-following part of the demo.

General Overview Schematics

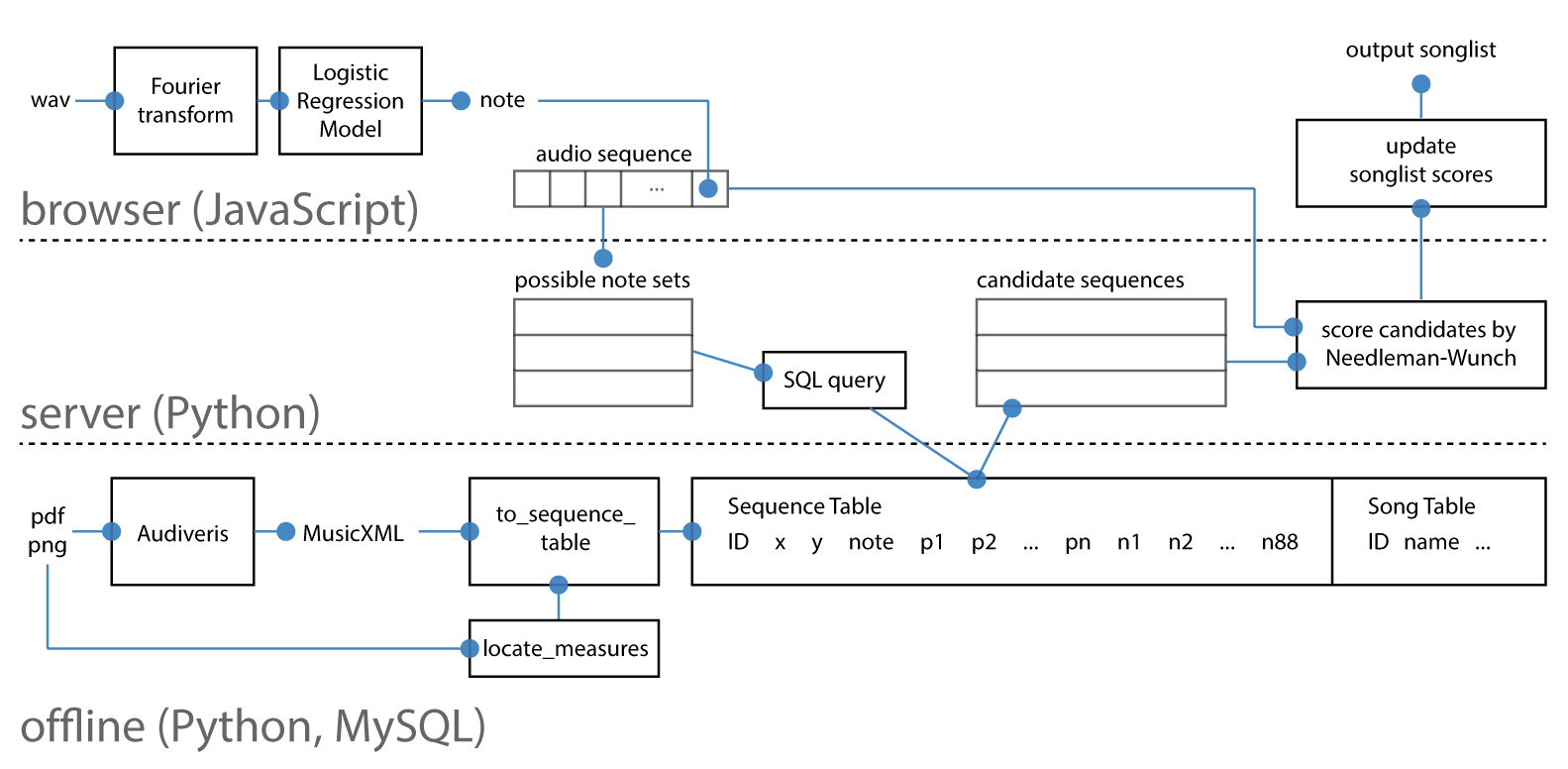

The figure below outlines the various processes performed on the frontend, backend and offline, for the case of song recognition.
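For song recognition, a short snippet played by the user has to be compared against every piece in the library. A plain global alignment would penalize all of the unplayed notes in each piece, so the sketch below uses a common Needleman-Wunsch variant (often called fitting or semi-global alignment) in which skipping notes at the start and end of a library piece is free, then ranks pieces by their best score. Whether the app used exactly this variant is an assumption, as are the scoring values and the hypothetical `fitting_score` and `rank_library` helpers.

```python
from typing import Dict, List, Tuple

# Assumed, illustrative scoring scheme (not taken from the app).
MATCH, MISMATCH, GAP = 1, -1, -1


def fitting_score(played: List[int], piece: List[int]) -> int:
    """Best score for aligning all of `played` against any stretch of `piece`.

    Leading and trailing notes of the piece cost nothing, because the user
    may start and stop playing anywhere in it. Uses two rolling DP rows.
    """
    n, m = len(played), len(piece)
    prev = [0] * (m + 1)  # row 0: skipping leading piece notes is free
    for i in range(1, n + 1):
        cur = [i * GAP] + [0] * m  # unmatched played notes still cost GAP
        for j in range(1, m + 1):
            sub = MATCH if played[i - 1] == piece[j - 1] else MISMATCH
            cur[j] = max(prev[j - 1] + sub, prev[j] + GAP, cur[j - 1] + GAP)
        prev = cur
    return max(prev)  # skipping trailing piece notes is free


def rank_library(played: List[int], library: Dict[str, List[int]]) -> List[Tuple[str, int]]:
    """Return (title, score) pairs sorted best match first."""
    scores = [(title, fitting_score(played, notes)) for title, notes in library.items()]
    return sorted(scores, key=lambda t: t[1], reverse=True)


# Usage with a tiny hypothetical library of MIDI note sequences.
library = {
    "Ode to Joy": [64, 64, 65, 67, 67, 65, 64, 62, 60, 60, 62, 64, 64, 62, 62],
    "Twinkle": [60, 60, 67, 67, 69, 69, 67, 65, 65, 64, 64, 62, 62, 60],
}
ranking = rank_library([67, 67, 65, 64, 62], library)
```

Here the snippet G G F E D matches a stretch of "Ode to Joy" exactly, so that piece ranks first with the maximum possible score of 5.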

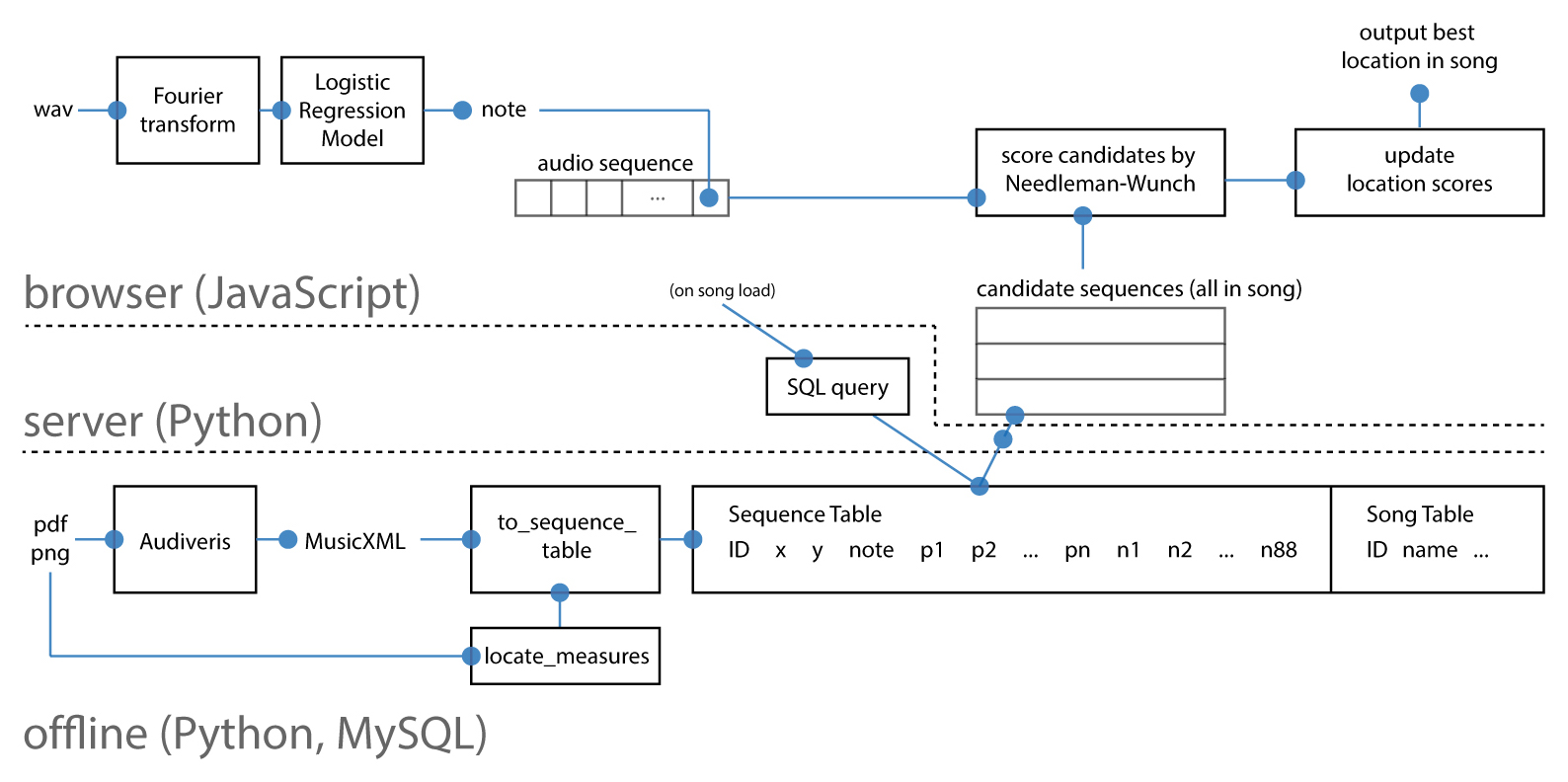

The next figure outlines the same processes for the case of note following. Note that the logistic regression model is implemented on the frontend in both cases, whereas the Needleman-Wunsch algorithm runs on the backend for song recognition and on the frontend for note following.
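For note following, what matters is where in the score the player currently is. One way to get this from an alignment, sketched here under assumptions, is to align everything played so far against a prefix of the score, so that the notes after the current position are not penalized, and take the best-scoring end column as the current note. The hypothetical `note_measure` and `measure_page` lists stand in for the measure locations recovered from the sheet music image. The app ran this step in the browser. Python is used here only for readability.

```python
from typing import List

# Assumed, illustrative scoring scheme (not taken from the app).
MATCH, MISMATCH, GAP = 1, -1, -1


def current_position(played: List[int], score: List[int]) -> int:
    """Index of the score note the player has most likely just reached.

    Aligns all notes played so far against a prefix of the score: skipped
    score notes before the position cost GAP, later notes cost nothing.
    Returns -1 if nothing has been matched yet.
    """
    n, m = len(played), len(score)
    prev = [j * GAP for j in range(m + 1)]
    for i in range(1, n + 1):
        cur = [i * GAP] + [0] * m
        for j in range(1, m + 1):
            sub = MATCH if played[i - 1] == score[j - 1] else MISMATCH
            cur[j] = max(prev[j - 1] + sub, prev[j] + GAP, cur[j - 1] + GAP)
        prev = cur
    # max() returns the earliest best column, so ties never jump ahead early.
    best_j = max(range(m + 1), key=lambda j: prev[j])
    return best_j - 1


def page_to_show(
    played: List[int], score: List[int], note_measure: List[int], measure_page: List[int]
) -> int:
    """Map the current score position to a page via measures (hypothetical layout data)."""
    pos = current_position(played, score)
    if pos < 0:
        return measure_page[0]
    return measure_page[note_measure[pos]]


# Hypothetical layout: two notes per measure, two measures per page.
score = [60, 62, 64, 65, 67, 69, 71, 72]
note_measure = [0, 0, 1, 1, 2, 2, 3, 3]
measure_page = [0, 0, 1, 1]
```

With this layout, playing C D E F G (`[60, 62, 64, 65, 67]`) places the player on the fifth note, in the third measure, so the second page (index 1) should be shown. A wrong note such as `[60, 62, 63, 65]` still lines up with the first four notes and keeps the first page. Recomputing the full table on every new note is simple but grows with the length of the performance, so a real-time version would likely restrict the alignment to a window around the last known position.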

Next Steps

The demo app has been well received by audiences, and there does seem to be some demand among musicians for an app like this. The next step is more extensive market research to determine whether further closed-source development (likely in the form of a tablet app) is warranted. If not, I will switch the GitHub repo from private to public and provide a better description of how it works. If so, I will dig in for more development (and may be looking for investors and iOS developers to join me).